Published 2025-12-28 10:23

Summary

Transformers predict the next token brilliantly, but they hit limits. Emerging architectures such as Pathway's BDH and Google's Titans with MIRAS aim for modular, memory-rich systems that reason like living organisms, not parrots.

The story

Is next-token prophecy really the final act?

Or are we building a bigger brain?

I keep watching Transformers do their clever mimic trick,

while the world keeps changing its terrain.

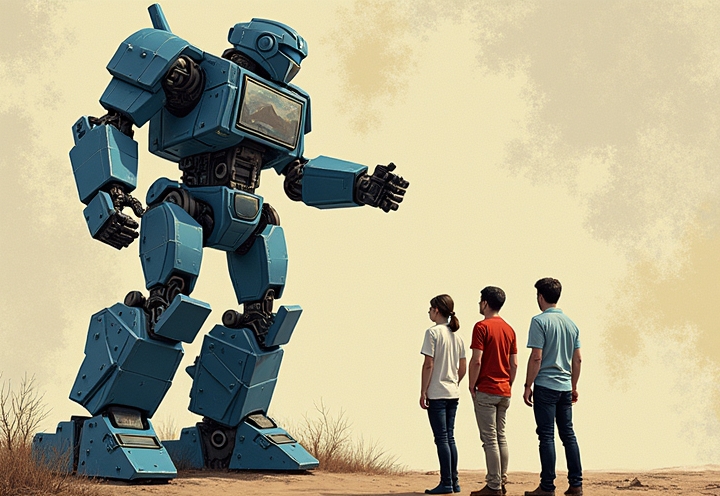

So here’s my question: what comes *after* LLMs, if we stop treating “predict the next token” like the final boss fight?

My bet is on post-Transformer architectures that act less like autocomplete and more like living systems.

Pathway's Baby Dragon Hatchling (BDH) is the kind of weird I respect. The pitch is a brain-ish, scale-free neural network in which modular structure can *emerge* during training, instead of being hand-installed like IKEA shelves for cognition. Inputs steer a population of interconnected artificial neurons, and knowledge builds up through their interactions. The promised payoff: sustained reasoning without context collapse, less black-box unpredictability, composability across models, provable risk levels, and learning from scarce data.

Then you've got Google's Titans architecture, paired with its MIRAS framework, aimed at curing Transformer "amnesia" by weaving long-term recall into the model's core execution. Not "more context," but *different computation*: explicit memorization inside the machine's heartbeat.
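To make "memorization inside the heartbeat" a little more concrete, here is a toy sketch, assuming a linear associative memory that keeps taking gradient steps while the model runs. The update (a surprise-like gradient on the reconstruction error, plus momentum and a small forgetting term) is my loose reading of the test-time memorization idea. The class `ToyNeuralMemory` and its parameters `lr`, `momentum`, and `forget` are hypothetical, and this is not Google's Titans or MIRAS code.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


class ToyNeuralMemory:
    """Hypothetical linear associative memory that keeps learning at inference time."""

    def __init__(self, dim: int, lr: float = 0.5, momentum: float = 0.5, forget: float = 0.01):
        self.dim = dim
        self.lr = lr              # step size on the "surprise" (gradient) signal
        self.momentum = momentum  # how much past surprise carries into the next update
        self.forget = forget      # weight decay that slowly erases old associations
        self.M: Matrix = [[0.0] * dim for _ in range(dim)]  # memory weights
        self.S: Matrix = [[0.0] * dim for _ in range(dim)]  # momentum buffer

    def read(self, key: Vector) -> Vector:
        # Retrieval is a plain matrix-vector product: M @ key.
        return [sum(m * k for m, k in zip(row, key)) for row in self.M]

    def write(self, key: Vector, value: Vector) -> float:
        """One online gradient step on 0.5 * ||M @ key - value||^2; returns the loss before the step."""
        pred = self.read(key)
        err = [p - v for p, v in zip(pred, value)]
        loss = 0.5 * sum(e * e for e in err)
        for i in range(self.dim):
            for j in range(self.dim):
                grad = err[i] * key[j]  # d(loss)/dM[i][j], computed with the pre-update M
                self.S[i][j] = self.momentum * self.S[i][j] - self.lr * grad
                self.M[i][j] = (1.0 - self.forget) * self.M[i][j] + self.S[i][j]
        return loss


memory = ToyNeuralMemory(dim=3)
key, value = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]
losses = [memory.write(key, value) for _ in range(50)]
print(losses[0], losses[-1], memory.read(key))
```

The first write reports a loss of 0.5; after repeated writes the loss shrinks toward zero and `read(key)` drifts toward `value`. The forgetting term is a deliberate trade-off: it keeps the memory from hoarding stale associations, at the cost of settling slightly short of a perfect match (about 0.99 with these settings).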

Nested Learning is the other breadcrumb I’m tracking: machine learning reframed as interwoven optimization problems, tuned for continual adaptation instead of one-and-done training.

If 2026 gets spicy, I expect neuromorphic, event-driven, sparse computation to start winning, not by scaling harder, but by behaving more like reality: dynamic, modular, and built for flux.
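For the neuromorphic bet, the classic building block is a spiking neuron. Below is a minimal leaky integrate-and-fire (LIF) sketch. It is a standard textbook neuron model, not code from any of the projects above, and the function names `lif_spikes` and `deliver_events` and the `threshold`/`leak` values are illustrative assumptions. It shows what "event-driven" and "sparse" mean in practice: the neuron quietly integrates input and only emits an event when its potential crosses a threshold, so downstream work scales with the number of spikes rather than the number of time steps.

```python
from typing import List


def lif_spikes(inputs: List[float], threshold: float = 1.0, leak: float = 0.9) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron and return the time steps at which it spikes."""
    potential = 0.0
    spikes: List[int] = []
    for t, current in enumerate(inputs):
        potential = leak * potential + current  # old charge leaks away, new input accumulates
        if potential >= threshold:              # event: fire only when the threshold is crossed
            spikes.append(t)
            potential = 0.0                     # reset after firing
    return spikes


def deliver_events(spike_times: List[int], weight: float, n_steps: int) -> List[float]:
    """Event-driven downstream update: touch only the time steps that carry a spike."""
    received = [0.0] * n_steps
    for t in spike_times:                       # cost grows with spikes, not with n_steps
        received[t] += weight
    return received


signal = [0.0, 0.0, 0.6, 0.6, 0.0, 0.0, 0.3, 0.0]
events = lif_spikes(signal)
print(events)                                   # → [3]
print(deliver_events(events, weight=0.5, n_steps=len(signal)))
```

Eight time steps of input produce a single event, and only that event costs the downstream layer anything.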

Can you imagine AI that reasons more like life, and less like a very confident parrot? I can. And I’m watching it hatch.

For more about this, visit https://linkedin.com/in/scottermonkey

This post was generated by Creative Robot, designed and built by Scott Howard Swain.

Keywords: #PostTransformerArchitectures, Transformers, Modular Architectures, Memory-Rich Systems
